Introduction

When metrics are enabled, Cloud Functions publish metrics about their results to TrustX's metric system. These metrics record information about the results obtained when a Cloud Function is executed.

This guide will demonstrate how to configure a Cloud Function to record the count of a result and expose this information to the activity within the Process Designer.

What Metrics Can Be Recorded?

Cloud Functions currently support the recording of explicit and implicit metrics, described by the following definitions:

- explicit metrics - where the writer of the Cloud Function defines which metrics to set.

- implicit metrics - derived from the ‘outcome’ of existing checks.

Metrics that have been selected for recording can be exposed as a counter representing the number of times an outcome has occurred. The supported outcomes that can be recorded are as follows:

- approve

- decline

- review

- na

- failed

- passed

- not_found

Outcomes that are not listed above will not be eligible for recording.
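As a rough illustration of what a counter over these outcomes means, the sketch below tallies a list of outcome strings and skips any value that is not in the supported list. The names `SUPPORTED_OUTCOMES` and `tally_outcomes` are hypothetical helpers written for this guide, not part of the TrustX platform, and the platform's own counting logic may differ.

```python
from collections import Counter

# The seven outcomes this guide lists as eligible for recording.
SUPPORTED_OUTCOMES = {
    "approve", "decline", "review", "na", "failed", "passed", "not_found",
}


def tally_outcomes(outcomes):
    """Count how often each supported outcome appears, skipping any others."""
    return Counter(o for o in outcomes if o in SUPPORTED_OUTCOMES)


# "timeout" is not a supported outcome, so it is left out of the tally.
sample = ["approve", "approve", "not_found", "timeout"]
counts = tally_outcomes(sample)
print(dict(counts))
```

Filtering before counting mirrors the rule above: an outcome outside the supported list never contributes to a counter.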

How to Configure Cloud Function Metrics

Step 1 - Configure the Cloud Function

This section describes how to configure a Cloud Function to record the number of times an outcome has occurred. Metrics are represented as key-value pairs contained within a JSON object. The value of each pair must be one of the seven outcomes listed above.

    import json
    from cfresults import Outcome, Result, OverallResult, OverallResultEncoder

    overallResult = OverallResult(Outcome.APPROVE, {})
    cfResults = json.loads(OverallResultEncoder().encode(overallResult))
    results["cfResults"] = cfResults

    metrics = {"counters": {"metric1": "not_found", "metric2": "approve", "metric3": "na"}}
    results["metrics"] = metrics

In the example Cloud Function above, the metrics dictionary is used to count the "not_found" results as "metric1", the "approve" results as "metric2", and the "na" results as "metric3".

metrics - The variable name of the dictionary that contains the metrics data.

"counters" - The name given to the JSON collection contained within the dictionary.

"metric1", "metric2", "metric3" - The names used to identify each recorded outcome. These can be any meaningful names that reflect the metric.

"not_found", "approve", "na" - The outcomes to be recorded.

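One way to avoid typos in the outcome values is to build the dictionary through a small helper that checks each value first. The sketch below assumes the `{"counters": {...}}` shape described above; `build_metrics` and `SUPPORTED_OUTCOMES` are hypothetical names introduced for illustration and are not provided by the `cfresults` module or the platform.

```python
# The seven outcomes this guide lists as eligible for recording.
SUPPORTED_OUTCOMES = {
    "approve", "decline", "review", "na", "failed", "passed", "not_found",
}


def build_metrics(counters):
    """Return a metrics dictionary in the {"counters": {...}} shape.

    Raises ValueError if any metric is mapped to an unsupported outcome.
    """
    for name, outcome in counters.items():
        if outcome not in SUPPORTED_OUTCOMES:
            raise ValueError(
                f"metric {name!r} uses unsupported outcome {outcome!r}"
            )
    return {"counters": dict(counters)}


metrics = build_metrics(
    {"metric1": "not_found", "metric2": "approve", "metric3": "na"}
)
# Inside a Cloud Function, this would then be assigned as in Step 1:
# results["metrics"] = metrics
```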
Next, it is important to configure the 'Execute Cloud Function' activity within the Process Designer to pass the metrics defined in the dictionary to the summary report.

Step 2 - Configure the Activity

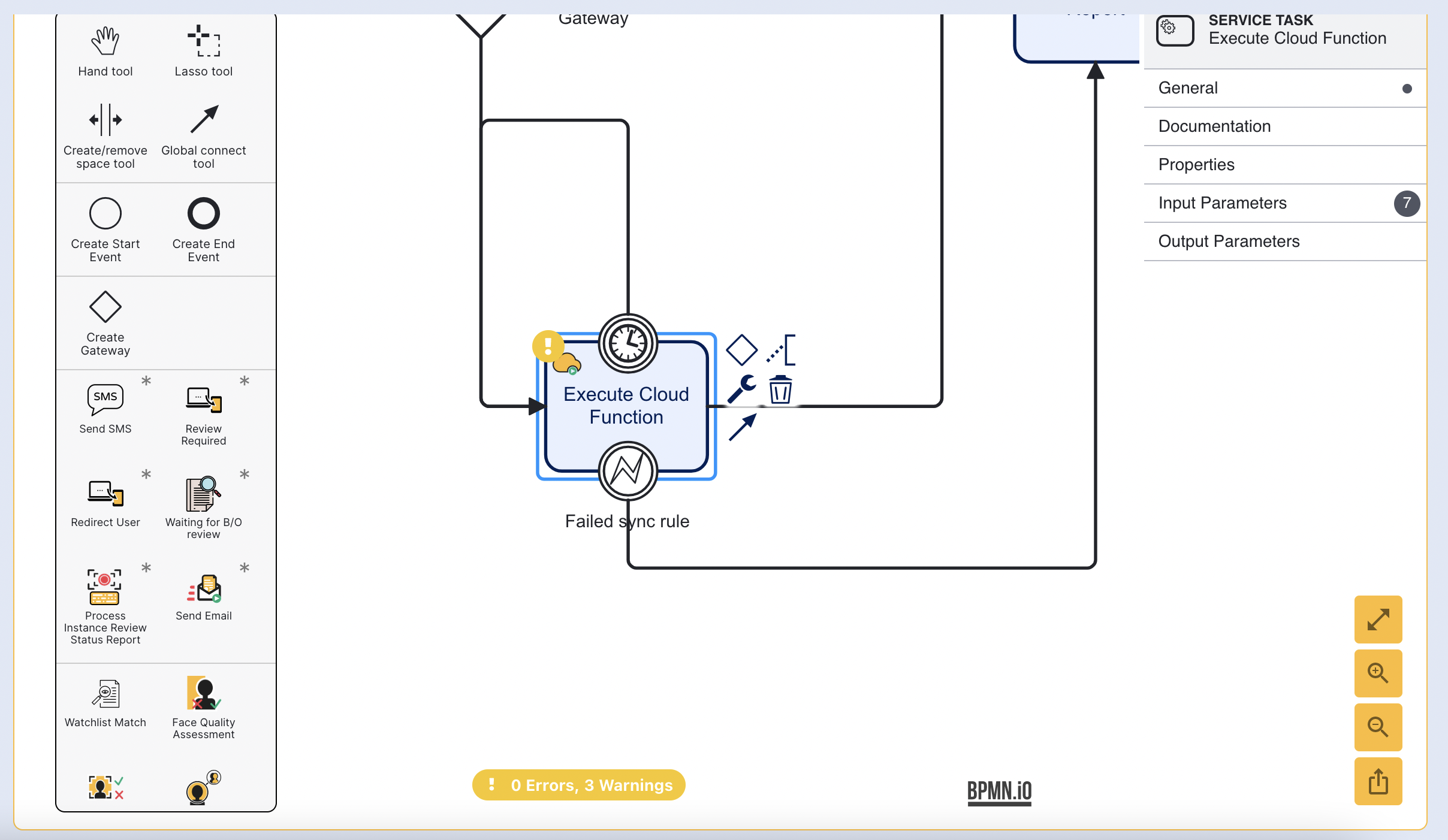

In the Process Designer, add the 'Execute Cloud Function' activity to the flow. For more information on working with the Process Designer, see the Cloud Functions and Process Definitions guide.

Once the Cloud Function activity is configured, select it to open the right-side contextual menu.

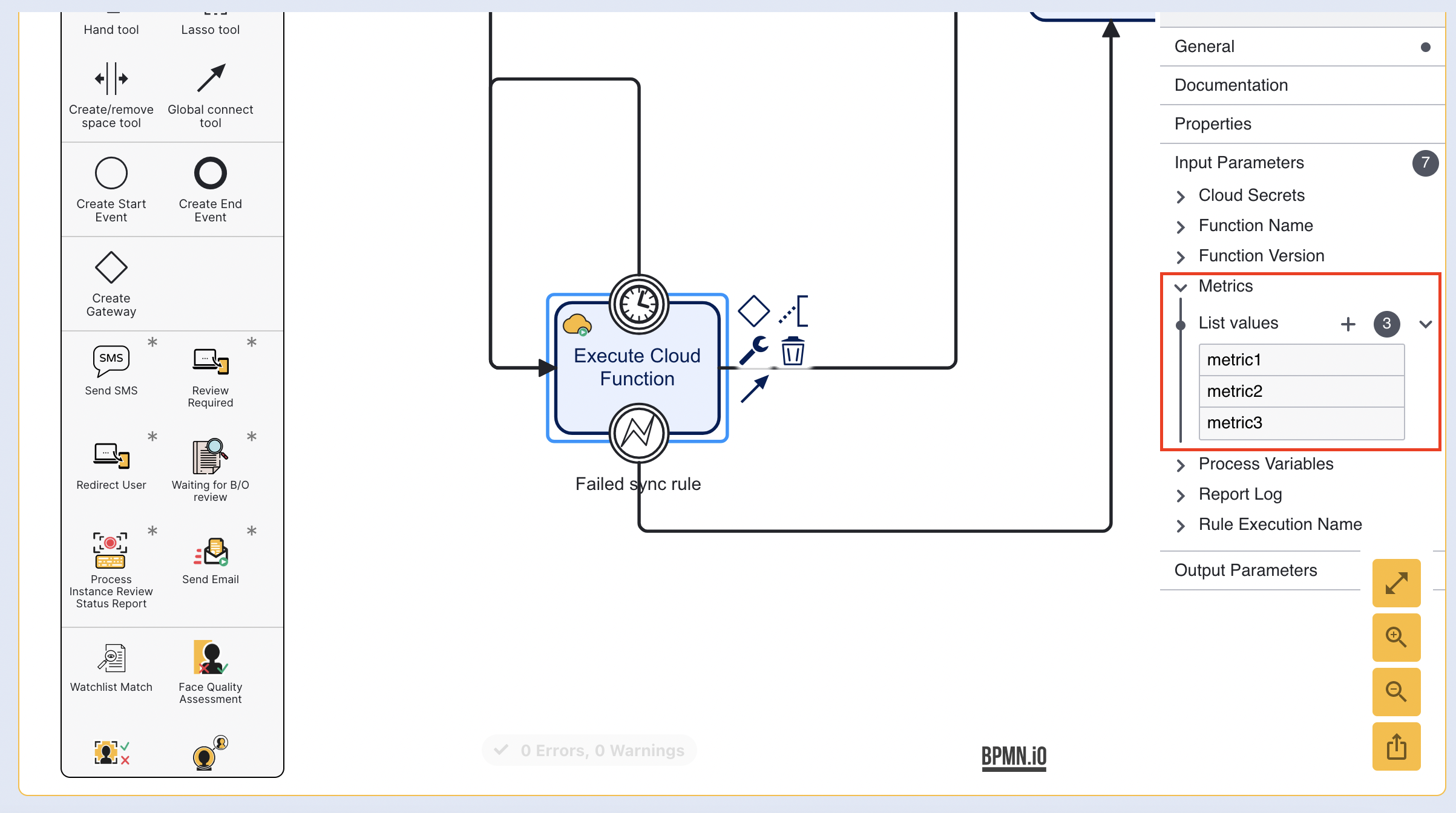

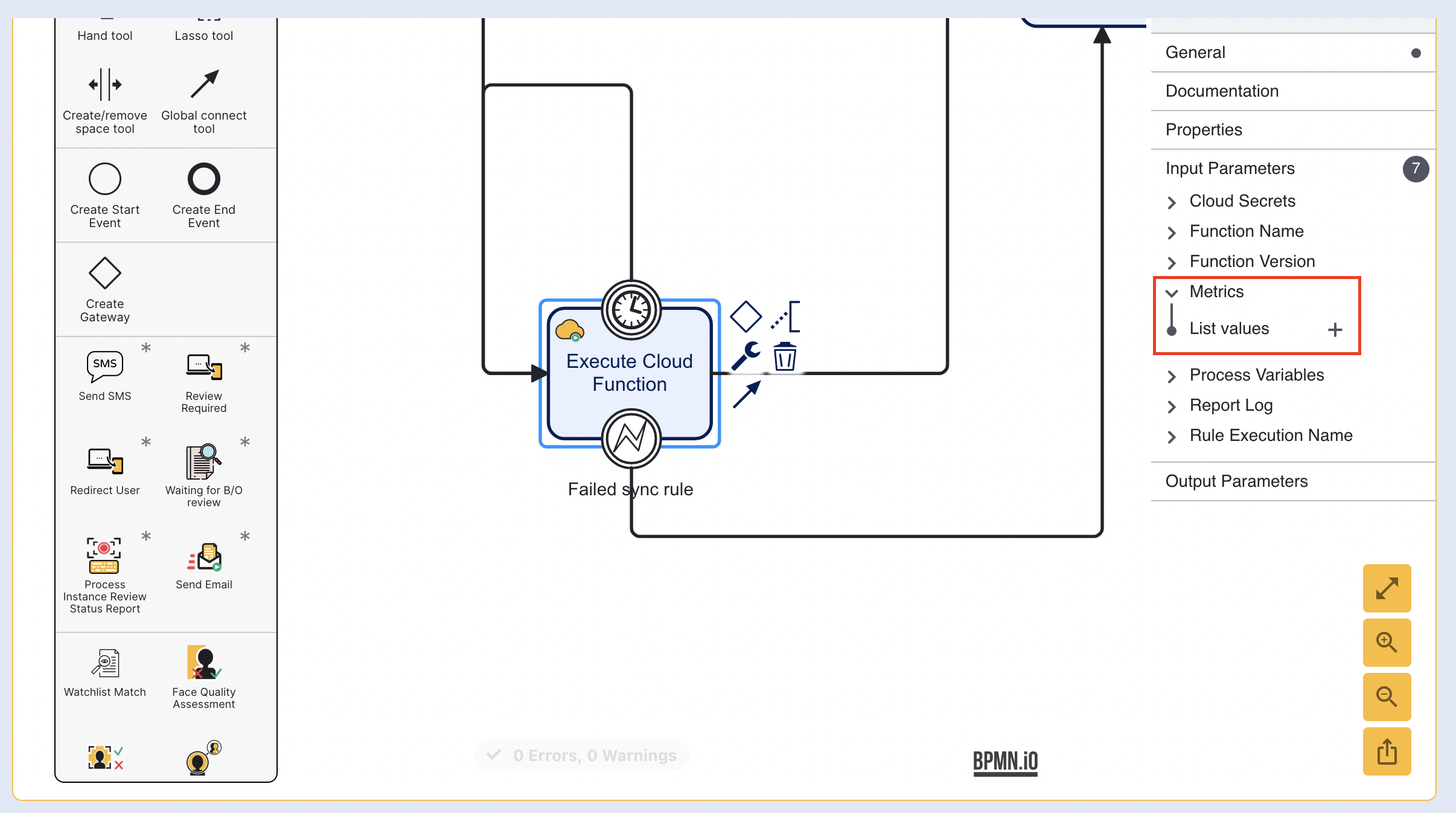

Expand the input parameters option and find the 'Metrics' option.

The 'List Values' should include every metric name defined within the metrics dictionary. In the example outlined in Step 1, these values are 'metric1', 'metric2', and 'metric3'.

Any value not included in the 'List values' collection will not be sent to the summary report. As a result, it will not be possible to use this metric when creating visualisations of the records.
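The effect of leaving a name out of the list can be pictured with a short local sketch. This is only an illustration of the rule described above, not the platform's implementation; `select_published` is a hypothetical function, and the list of names stands in for whatever is entered in the activity.

```python
def select_published(counters, list_values):
    """Keep only the counters whose names appear in the activity's list values."""
    return {
        name: outcome
        for name, outcome in counters.items()
        if name in list_values
    }


# Counters as defined in the Step 1 example.
counters = {"metric1": "not_found", "metric2": "approve", "metric3": "na"}

# Assumed activity configuration in which 'metric3' was left out.
list_values = ["metric1", "metric2"]

published = select_published(counters, list_values)
print(published)
```

Under this assumption, 'metric3' is still set by the Cloud Function but never reaches the summary report.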

To publish metrics for an existing check, add the name of the check to the metrics list. The reserved metric name 'overall' is used to record the count of the overall result.